Theory of Learning

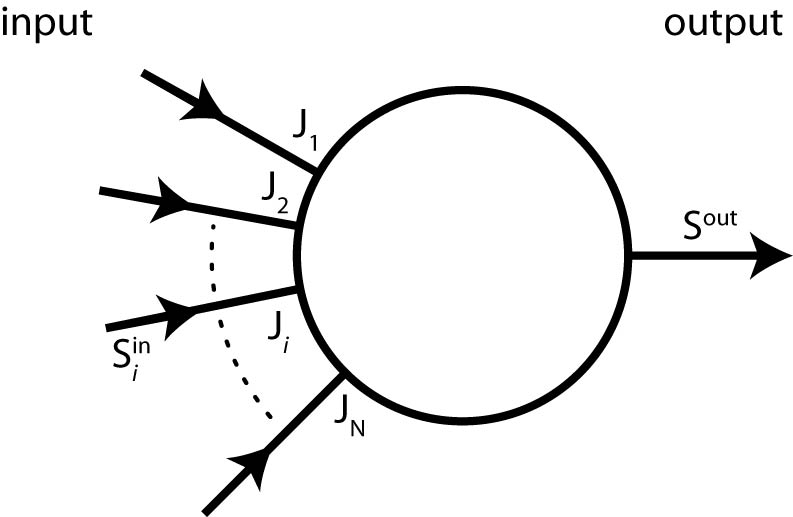

Sensory data are not processed by single neurons but by large networks of these strongly interacting, non-linear elements. Interesting systems are therefore characterized by a large number of degrees of freedom. Nonetheless, they can in principle be treated either analytically or numerically. Techniques from non-equilibrium statistical mechanics, non-linear dynamics and bifurcation theory are helpful in analyzing such systems.

A single neuron in a neural network can be treated as a threshold element: it fires, that is, it generates an action potential, when the potential at the axon hillock reaches a certain level. The resulting action potential propagates along the axon, an active cable with synapses at its end. The efficacy (strength) of a synapse determines the degree to which the signal is transmitted to the postsynaptic neuron, and thus how the state of the neural network changes. Consequently, information is encoded by modifying the efficacies of synapses.

In 1949, Hebb formulated a neurophysiological learning rule. According to this rule, the modification of a synapse depends on the two neurons it connects: the presynaptic and the postsynaptic neuron. Hebb's rule has been applied successfully in neural networks. Our group has actively participated in the development of new neuronal models (Spike-Response-Neuron). Furthermore, we have formulated learning rules that can be used to understand spatio-temporal pattern formation in neuronal networks.
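The threshold element and the Hebbian idea described above can be illustrated with a minimal sketch. It is not the Spike-Response-Neuron or the group's own learning rule; it assumes a plain weighted sum as the potential and a textbook product form of Hebb's rule. The names `postsynaptic_potential`, `fires` and `hebbian_update`, the threshold of 1.0 and the learning rate of 0.1 are all hypothetical choices made for illustration.

```python
def postsynaptic_potential(inputs, efficacies):
    """Sum the presynaptic signals, each scaled by its synaptic efficacy.

    Assumption: a simple weighted sum stands in for the potential
    at the axon hillock.
    """
    return sum(j * x for j, x in zip(efficacies, inputs))


def fires(potential, threshold=1.0):
    """Threshold element: fire when the potential reaches the threshold.

    Assumption: the threshold value 1.0 is arbitrary.
    """
    return potential >= threshold


def hebbian_update(efficacies, inputs, post_fired, rate=0.1):
    """Textbook Hebbian step (an assumption, not the group's rule).

    Each efficacy grows in proportion to the product of presynaptic
    activity and postsynaptic firing, so the change depends on both
    neurons connected by the synapse.
    """
    post = 1.0 if post_fired else 0.0
    return [j + rate * x * post for j, x in zip(efficacies, inputs)]


if __name__ == "__main__":
    inputs = [1, 0, 1]              # which presynaptic neurons are active
    efficacies = [0.6, 0.9, 0.5]    # synaptic strengths J
    potential = postsynaptic_potential(inputs, efficacies)
    spiked = fires(potential)
    print(spiked, hebbian_update(efficacies, inputs, spiked))
```

In this sketch only the synapses whose presynaptic neuron was active are changed, and only when the postsynaptic neuron fires; inactive synapses keep their efficacy.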

Our learning rule has been applied to several biological systems such as the barn owl and Xenopus. In these systems, the learning rule was used to establish sensory maps.

last modified 2007-11-05 by webmaster@Franosch.org

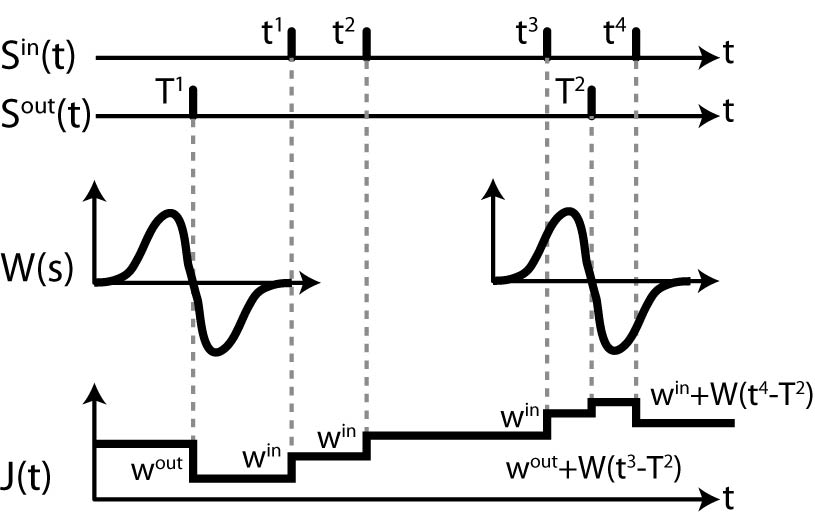

According to the learning rule above, a synapse is strengthened if the postsynaptic neuron fires shortly after the presynaptic neuron. In this case the synapse contributed to creating an action potential in the postsynaptic cell. In the opposite case, where the postsynaptic neuron fires before the presynaptic one, the synapse is "punished", that is, weakened, since it evidently had no influence on the creation of the action potential. The change of the synaptic weights (J) is described mathematically by the function shown in the figure, called the "learning window" (W).
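The qualitative behaviour of such a learning window can be sketched in a few lines. The exact shape of W is given only in the figure, so the two-sided exponential used here is an assumption, as are the amplitudes, the time constants, the learning rate, and the sign convention s = t_post - t_pre. The function names `learning_window` and `weight_change` are hypothetical.

```python
import math


def learning_window(s, a_plus=1.0, a_minus=0.5, tau_plus=0.02, tau_minus=0.02):
    """Assumed learning window W(s), with s = t_post - t_pre in seconds.

    s > 0: the postsynaptic spike follows the presynaptic one, W > 0.
    s < 0: the postsynaptic spike precedes the presynaptic one, W < 0.
    The exponential shape and all parameter values are illustrative.
    """
    if s > 0:
        return a_plus * math.exp(-s / tau_plus)
    if s < 0:
        return -a_minus * math.exp(s / tau_minus)
    return 0.0


def weight_change(pre_spikes, post_spikes, rate=0.01):
    """Change of the synaptic weight J, summed over all spike pairs.

    Assumption: contributions from all pre/post spike pairs add linearly.
    """
    return rate * sum(
        learning_window(t_post - t_pre)
        for t_pre in pre_spikes
        for t_post in post_spikes
    )


if __name__ == "__main__":
    # Presynaptic spike 5 ms before the postsynaptic spike: strengthening.
    print(weight_change([0.010], [0.015]))
    # Presynaptic spike 5 ms after the postsynaptic spike: weakening.
    print(weight_change([0.015], [0.010]))
```

With these assumed parameters, the first call yields a positive weight change and the second a negative one, matching the strengthening and "punishing" cases described in the text.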
